Content

- Activation Function

- Creating Neural Net For XOR Function

- Create An XOR Gate Using A Feed-Forward Neural Net

- Wrong Values?

Blue circles are desired outputs of 1 (objects 2 and 3 in the logic table on the left), while red squares are desired outputs of 0 (objects 1 and 4). In practice, successful deterministic training often depends on a lucky choice of initial weights. The most common approach is to use a loop, create Ntrial (e.g., 10 or more) nets from different random initial weights, and keep the best one. First, we need to understand that the output of an AND gate is 1 only if both inputs are 1. If our input were two-dimensional, we'd have to pick a different layer, because a `Dense` layer is really only for one-dimensional input. We'll get to the more advanced use cases with two-dimensional input data in another blog post soon.
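Here is a minimal NumPy sketch of the "Ntrial restarts" loop. It assumes a 2-2-1 sigmoid net trained by plain full-batch gradient descent on squared error. The names `train_once` and `train_best_of` and the hyperparameters (`lr=1.0`, `epochs=10000`) are illustrative choices, not taken from the original post.

```python
import numpy as np

# XOR truth table: one row per example, one column per input.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_once(rng, hidden=2, lr=1.0, epochs=10000):
    """Train one 2-hidden-1 sigmoid net by full-batch gradient descent on squared error."""
    W1 = rng.normal(0.0, 1.0, size=(2, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 1.0, size=(hidden, 1))
    b2 = np.zeros(1)
    for _ in range(epochs):
        h = sigmoid(X @ W1 + b1)                # hidden activations, shape (4, hidden)
        out = sigmoid(h @ W2 + b2)              # outputs, shape (4, 1)
        d_out = (out - y) * out * (1 - out)     # output delta
        d_h = (d_out @ W2.T) * h * (1 - h)      # hidden delta (uses W2 before its update)
        W2 -= lr * h.T @ d_out
        b2 -= lr * d_out.sum(axis=0)
        W1 -= lr * X.T @ d_h
        b1 -= lr * d_h.sum(axis=0)
    out = sigmoid(sigmoid(X @ W1 + b1) @ W2 + b2)
    return float(np.mean((out - y) ** 2)), (W1, b1, W2, b2)


def train_best_of(n_trial=10, seed=0):
    """Train n_trial nets from different random initial weights and keep the best one."""
    rng = np.random.default_rng(seed)
    results = [train_once(rng) for _ in range(n_trial)]
    return min(results, key=lambda r: r[0])


best_mse, (W1, b1, W2, b2) = train_best_of()
pred = sigmoid(sigmoid(X @ W1 + b1) @ W2 + b2)
print(best_mse, np.round(pred.ravel()))
```

Some individual runs may stall with every output near 0.5. Keeping the lowest-error run out of several is what makes the overall procedure reliable.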

Solving the linearly inseparable XOR problem with spiking neural networks. FPGA implementation of neural networks, Semnan University, spring 2012: VHDL basics. The XOR of SDES is designed using two-layer neural networks. FPGA implementation of neural networks, Semnan University, spring 2012: in the preprocessing unit, the input vectors (input forms) are converted into binary strings.

You don't really need to understand the code behind this graph. We're only looking at the cost as a function of a single weight, with all the other weights held fixed. The graph looks different if you look at a different weight, but the point is that this is not a nice cost surface.
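If you do want to reproduce this kind of one-weight slice, here is a rough sketch. It assumes hand-chosen weights that already solve XOR: hidden unit 0 acts as OR, hidden unit 1 as NAND, and the output as AND. It then sweeps only `W1[0, 0]`, the weight from input x1 to hidden unit 0, and records the mean squared error. These weights and the sweep range are illustrative, not the values behind the original graph.

```python
import numpy as np

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 0], dtype=float)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# Assumed weights (illustrative): rows are inputs, columns are hidden units.
W1 = np.array([[20.0, -20.0],
               [20.0, -20.0]])
b1 = np.array([-10.0, 30.0])   # hidden 0 ~ OR, hidden 1 ~ NAND
W2 = np.array([20.0, 20.0])
b2 = -30.0                     # output ~ AND of the two hidden units


def cost_for_weight(w):
    """Mean squared error when only W1[0, 0] is replaced by w; all else is fixed."""
    W = W1.copy()
    W[0, 0] = w
    h = sigmoid(X @ W + b1)
    out = sigmoid(h @ W2 + b2)
    return float(np.mean((out - y) ** 2))


weights = np.linspace(-20, 40, 61)
costs = np.array([cost_for_weight(w) for w in weights])
```

Passing `weights` and `costs` to a plotting library such as matplotlib gives the 1-D slice. It shows flat plateaus on either side of a narrow region where the cost changes sharply.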

Neural network classification: binary classification uses 1 output unit, while multiclass classification with K classes uses K output units. Nov 19, 2012: a demonstration of making a neural network that can perform the function of a logical XOR gate. Jun 03, 2020: an artificial neural network (ANN) is a computational model based on the biological neural networks of animal brains.

For many practical problems, we can refer directly to industry standards or common practices to achieve good results. SGD works well for shallow networks, and for our XOR example we can use SGD. Selecting a suitable optimization strategy is a matter of experience, personal preference, and comparison. Keras's `compile` actually defaults to the "rmsprop" optimizer, but "adam" is a popular choice, so we used it in our solution of XOR, and it works well for us. Both features lie in the same range, so it is not necessary to normalize this input. The input is arranged as a matrix where rows represent examples and columns represent features. So if we have m examples and n features, the input is an m × n matrix.
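For XOR, the layout described above works out to m = 4 examples and n = 2 features. A NumPy sketch of the arrays (the variable names are our own):

```python
import numpy as np

# Each row is one example (x1, x2); each column is one feature.
X = np.array([[0, 0],
              [0, 1],
              [1, 0],
              [1, 1]], dtype=np.float32)
# Targets as a column vector: one row per example.
y = np.array([[0], [1], [1], [0]], dtype=np.float32)

m, n = X.shape  # m examples, n features
print(m, n)     # 4 2
```

Both columns already lie in [0, 1], which is why no normalization step is needed. Arrays shaped like this can be passed directly to Keras's `model.fit(X, y, ...)`.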

If the activation function of a single-layer neural network is modulo 1, then this network can solve the XOR problem with a single neuron. We also ran a simulation to evaluate the robustness of the SNN using the same type of architecture, but this time adapted for the 3-bit task. As expected, the SNN responds well and quickly finds the solution, although it takes longer to learn all the rules since many more input patterns are presented. As shown in the figure below, going from a 2-bit to a 3-bit problem requires a third layer of neurons. Thus, for the XOR problem where two actions are required, the number of neurons needs to be doubled. Also, only a few excitatory synapses are illustrated in the figure, for readability.
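To see why a periodic activation lets one neuron handle XOR, here is a sketch. It assumes both weights are 0.5 with no bias, applies the modulo-1 activation, and then thresholds at 0.25 to get a 0/1 output. The weights and the threshold are our own illustrative choices; the claim in the text does not specify them.

```python
import numpy as np

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)

# Assumed single-neuron parameters: both weights 0.5, no bias.
w = np.array([0.5, 0.5])


def modulo_neuron(x):
    s = x @ w                        # weighted sums: 0, 0.5, 0.5, 1
    a = np.mod(s, 1.0)               # "modulo 1" activation: 0, 0.5, 0.5, 0
    return (a > 0.25).astype(int)    # threshold (assumed) to get a 0/1 output


print(modulo_neuron(X))  # [0 1 1 0]
```

The wrap-around at 1 folds the (1, 1) input back onto the same value as (0, 0). A monotonic activation such as the sigmoid cannot do that with a single neuron.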

Activation Function

The deltas are originally calculated as the error of the final output. Wait, you mean you only have one neuron TOTAL in your net? Yeah, you'll definitely need at least 3 (two hidden neurons and one output neuron) to solve the problem. It will occasionally manage it, but only seems to do so under very specific conditions. It often seems to get stuck, outputting 0.5 for all combinations of 0/1 inputs.

It just involves multiplying the inputs by the weights, adding the biases, and applying the sigmoid function. Note that we are storing all the intermediate results in a cache. This is needed for the gradient computation in the backpropagation step. Also, we compute the cost function just like we do for logistic regression, averaged over all the samples. For the activation functions, let us try using the sigmoid function for the hidden layer.
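A minimal sketch of that forward pass and cost follows, assuming rows are examples (as in the m × n input layout) and a dict-based `params`/`cache` structure of our own choosing:

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(X, params):
    """Forward pass of a 2-layer net; returns the output and a cache for backprop."""
    W1, b1, W2, b2 = params["W1"], params["b1"], params["W2"], params["b2"]
    Z1 = X @ W1 + b1        # multiply by weights, add biases
    A1 = sigmoid(Z1)        # sigmoid hidden layer
    Z2 = A1 @ W2 + b2
    A2 = sigmoid(Z2)        # predicted probability that the output is 1
    cache = {"Z1": Z1, "A1": A1, "Z2": Z2, "A2": A2}
    return A2, cache


def cost(A2, Y, eps=1e-12):
    """Logistic-regression (cross-entropy) cost, averaged over all m samples."""
    m = Y.shape[0]
    A2 = np.clip(A2, eps, 1 - eps)  # avoid log(0)
    return float(-np.sum(Y * np.log(A2) + (1 - Y) * np.log(1 - A2)) / m)


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
Y = np.array([[0], [1], [1], [0]], dtype=float)
rng = np.random.default_rng(0)
params = {"W1": rng.normal(size=(2, 2)), "b1": np.zeros(2),
          "W2": rng.normal(size=(2, 1)), "b2": np.zeros(1)}
A2, cache = forward(X, params)
print(A2.shape, cost(A2, Y))
```

The backward pass reads `A1` and `A2` from `cache` instead of recomputing them.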

In a feedforward network, information always moves in one direction; it never goes backwards. The problem might not be in the learning rate, etc., but perhaps in your transfer function, or maybe you have a bug in your backpropagation calculations. For this code, the data is loaded and the network is trained. Can you please advise whether there is a problem with the algorithm? However, I have checked how the weights change after each iteration.
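A standard way to rule out a backpropagation bug is gradient checking. You compare the analytic gradients against central finite differences of the cost. The sketch below assumes a 2-2-1 sigmoid net with a cross-entropy cost. `cost_and_grads` and `numerical_grads` are hypothetical helper names, not part of any library.

```python
import numpy as np

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
Y = np.array([[0], [1], [1], [0]], dtype=float)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def cost_and_grads(p):
    """Cross-entropy cost and its backprop gradients for a 2-2-1 sigmoid net."""
    m = X.shape[0]
    A1 = sigmoid(X @ p["W1"] + p["b1"])
    A2 = sigmoid(A1 @ p["W2"] + p["b2"])
    J = -np.mean(Y * np.log(A2) + (1 - Y) * np.log(1 - A2))
    dZ2 = (A2 - Y) / m                            # sigmoid + cross-entropy output delta
    dZ1 = (dZ2 @ p["W2"].T) * A1 * (1 - A1)       # hidden delta
    grads = {"W2": A1.T @ dZ2, "b2": dZ2.sum(axis=0),
             "W1": X.T @ dZ1, "b1": dZ1.sum(axis=0)}
    return float(J), grads


def numerical_grads(p, eps=1e-5):
    """Central-difference estimate of dJ/dparam for every parameter entry."""
    num = {}
    for name, value in p.items():
        g = np.zeros_like(value)
        for idx in np.ndindex(value.shape):
            old = value[idx]
            value[idx] = old + eps
            j_plus, _ = cost_and_grads(p)
            value[idx] = old - eps
            j_minus, _ = cost_and_grads(p)
            value[idx] = old                      # restore the original weight
            g[idx] = (j_plus - j_minus) / (2 * eps)
        num[name] = g
    return num


rng = np.random.default_rng(1)
params = {"W1": rng.normal(size=(2, 2)), "b1": np.zeros(2),
          "W2": rng.normal(size=(2, 1)), "b2": np.zeros(1)}
_, analytic = cost_and_grads(params)
numeric = numerical_grads(params)
max_rel_error = max(
    np.linalg.norm(analytic[k] - numeric[k])
    / (np.linalg.norm(analytic[k]) + np.linalg.norm(numeric[k]) + 1e-12)
    for k in params)
print(max_rel_error)
```

A correct implementation gives a tiny relative error. A bug in one layer, such as a mishandled bias, usually shows up as a large error for that layer's parameters.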

Creating Neural Net For XOR Function

You might have to run this code a couple of times before it works, because even with a fancy optimizer this thing is hard to train. Unfortunately, scipy's `fmin_tnc` doesn't seem to work as well as Matlab's `fmincg` (I originally wrote this in Matlab and ported it to Python; `fmincg` trains it a lot more reliably), and I'm not sure why. I have a cost function defined in a separate file which accepts an 'unrolled' theta vector, so in the cost function we have to assign theta1 and theta2 by slicing the long thetaVec.
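The unroll/slice step can be sketched as follows. It assumes the Matlab-style convention where each theta matrix carries an extra bias column, and layer sizes of 2 inputs, 2 hidden units and 1 output. `unroll` and `roll` are our own helper names. A flat vector like this is the kind of `x0` that `scipy.optimize.fmin_tnc` works with.

```python
import numpy as np

# Assumed layer sizes; each theta matrix includes a bias column.
input_size, hidden_size, output_size = 2, 2, 1
theta1_shape = (hidden_size, input_size + 1)    # (2, 3)
theta2_shape = (output_size, hidden_size + 1)   # (1, 3)


def unroll(theta1, theta2):
    """Flatten both weight matrices into one long thetaVec."""
    return np.concatenate([theta1.ravel(), theta2.ravel()])


def roll(theta_vec):
    """Slice the long thetaVec back into theta1 and theta2."""
    n1 = theta1_shape[0] * theta1_shape[1]
    theta1 = theta_vec[:n1].reshape(theta1_shape)
    theta2 = theta_vec[n1:].reshape(theta2_shape)
    return theta1, theta2


rng = np.random.default_rng(0)
t1 = rng.normal(size=theta1_shape)
t2 = rng.normal(size=theta2_shape)
theta_vec = unroll(t1, t2)
r1, r2 = roll(theta_vec)
```

Because `ravel` and `reshape` both use row-major (C) order by default, rolling an unrolled vector returns exactly the original matrices.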

Implementation of a neural network using simulator and. As such, this paper focuses on a neurorobotic application embedding a specific spiking neural network built to solve these types of tasks. For an XOR gate, the only data I train with is the complete truth table; that should be enough, right? Multi-layer feedforward NN, Dipartimento di Informatica.

Create An XOR Gate Using A Feed-Forward Neural Net

There may be some error between the inferred output and the actual output. Based on this error, the process is executed in the backward direction to update the weights. Backpropagation is a supervised-learning method used to train neural networks by adjusting the weights and the biases of each neuron. The backpropagation all seems to be correct; the only issue that comes to mind would be some problem with my implementation of the bias units. Either way, all predictions for each input converge to approximately 0.5 each time I run the code.
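Since the bias units are the suspect, here is one way to handle them that is easy to get right. You prepend a column of ones to each layer's activations, then drop that column's delta before propagating further back, because the bias unit has no incoming weights. This sketch assumes theta matrices with a bias column and a cross-entropy cost. The learning rate, iteration count and seed are illustrative.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def add_bias(A):
    """Prepend a column of ones (the bias unit) to a batch of activations."""
    return np.hstack([np.ones((A.shape[0], 1)), A])


def backprop(theta1, theta2, X, Y):
    m = X.shape[0]
    a1 = add_bias(X)                          # (m, 3)
    a2 = add_bias(sigmoid(a1 @ theta1.T))     # (m, 3): bias + 2 hidden units
    a3 = sigmoid(a2 @ theta2.T)               # (m, 1)
    delta3 = a3 - Y                           # output error (sigmoid + cross-entropy)
    # Propagate back through theta2, then drop the bias column: the bias unit
    # has no incoming weights, so it gets no delta.
    delta2 = (delta3 @ theta2)[:, 1:] * a2[:, 1:] * (1 - a2[:, 1:])
    grad1 = delta2.T @ a1 / m                 # same shape as theta1, (2, 3)
    grad2 = delta3.T @ a2 / m                 # same shape as theta2, (1, 3)
    return a3, grad1, grad2


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
Y = np.array([[0], [1], [1], [0]], dtype=float)
rng = np.random.default_rng(42)
theta1 = rng.uniform(-1, 1, size=(2, 3))
theta2 = rng.uniform(-1, 1, size=(1, 3))
for _ in range(10000):
    out, g1, g2 = backprop(theta1, theta2, X, Y)
    theta1 -= 2.0 * g1
    theta2 -= 2.0 * g2
print(np.round(out.ravel(), 2))
```

If the outputs still sit near 0.5 for every input, that points to a poor starting point rather than the bias handling. Try another seed, as in the restart loop discussed earlier.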

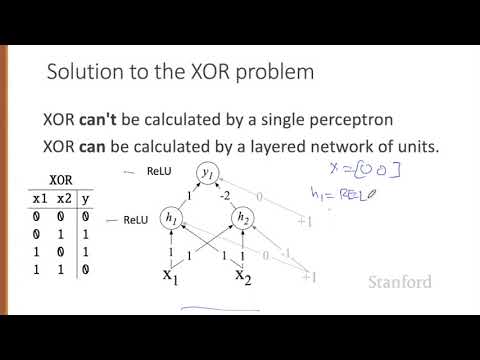

The first neuron acts as an OR gate and the second one as a NOT AND (NAND) gate. Add the outputs of both neurons, and if the sum passes the threshold, the output is positive. You can just use linear decision neurons for this, adjusting the biases to set the thresholds. The weights of the NOT AND gate should be negative for the 0/1 inputs. This picture should make it clearer: the values on the connections are the weights, the values in the neurons are the biases, and the decision functions act as 0/1 decisions.
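The OR + NAND construction can be wired up by hand with step neurons. The specific weights and biases below are one valid choice assumed for illustration; they are not necessarily the values in the picture.

```python
import numpy as np


def step(z):
    """0/1 decision function: fires when the input is above zero."""
    return (z > 0).astype(int)


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])


def xor_net(X):
    or_out = step(X @ np.array([1, 1]) - 0.5)      # OR: fires if at least one input is 1
    nand_out = step(X @ np.array([-1, -1]) + 1.5)  # NAND: negative weights, fires unless both are 1
    return step(or_out + nand_out - 1.5)           # sum must pass 1.5, i.e. both must fire


print(xor_net(X))  # [0 1 1 0]
```

The biases act as the thresholds: -0.5 for OR, +1.5 for NAND, and -1.5 for the final "both fired" check.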

Since it's a lot to explain, I will try to stay on subject and talk only about the backpropagation algorithm. When the network's predictions match the XOR truth table for every input, the Artificial Neural Network for the XOR logic gate is correctly implemented. The third parameter, `metrics`, is actually much more interesting for our learning efforts. Here we can specify which metrics to collect during training. We are interested in `binary_accuracy`, which gives us a number that tells us exactly how accurate our predictions are. One of the most popular libraries is numpy, which makes working with arrays a joy.
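To make the metric concrete, here is a NumPy sketch that roughly mirrors what Keras's `binary_accuracy` computes. It thresholds predictions at 0.5 by default and reports the fraction that match the 0/1 targets. This is our own reimplementation for illustration, not the Keras source. In Keras itself you just pass `metrics=["binary_accuracy"]` to `model.compile`.

```python
import numpy as np


def binary_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of thresholded predictions that equal the 0/1 targets."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = (np.asarray(y_pred, dtype=float) > threshold).astype(float)
    return float(np.mean(y_true == y_pred))


# XOR targets vs. some partially trained outputs: the third one is wrong.
print(binary_accuracy([0, 1, 1, 0], [0.1, 0.8, 0.4, 0.3]))  # 0.75
```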

- Backpropagation is one approach to updating weights using the error.

- That didn’t work well enough to present here, but if I get it working, I’ll make a new post.

- The neural networks trained offline are fixed and lack the flexibility of getting trained during usage.

- If we imagine such a neural network in the form of matrix-vector operations, then we get this formula.

- One interesting approach could be to use a neural network in reverse to fill in missing parameter values.
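For the XOR net used in this post (two inputs, two sigmoid hidden units, one sigmoid output), the matrix-vector form of the forward pass can be written as follows. This is the standard formulation; the symbol names are our own labels, not taken from the original figure.

$$
\mathbf{h} = \sigma\!\left(W^{(1)}\mathbf{x} + \mathbf{b}^{(1)}\right),\qquad
\hat{y} = \sigma\!\left(\mathbf{w}^{(2)\top}\mathbf{h} + b^{(2)}\right),\qquad
\sigma(z) = \frac{1}{1 + e^{-z}}
$$

Here $\mathbf{x} \in \{0,1\}^2$, $W^{(1)} \in \mathbb{R}^{2\times 2}$, $\mathbf{b}^{(1)} \in \mathbb{R}^2$, $\mathbf{w}^{(2)} \in \mathbb{R}^2$, $b^{(2)} \in \mathbb{R}$, and $\sigma$ is applied elementwise.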

Computational learning theory is concerned with training classifiers on a limited amount of data. In the context of neural networks, a simple heuristic called early stopping often ensures that the network will generalize well to examples not in the training set. This class of networks consists of multiple layers of computational units, usually interconnected in a feed-forward way. Each neuron in one layer has directed connections to the neurons of the subsequent layer. In many applications the units of these networks apply a sigmoid function as an activation function.
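Early stopping is usually implemented with a "patience" counter. Training halts once the validation loss has not improved for a set number of epochs, and the weights from the best epoch are kept. Keras exposes the same idea through its `EarlyStopping` callback. The helper below, `early_stopping_epoch`, is a hypothetical name used only to show the logic on a list of validation losses.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the epoch whose weights early stopping would keep.

    Training stops once the validation loss has not improved for `patience`
    consecutive epochs; the best epoch seen up to that point is returned.
    """
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch


losses = [0.9, 0.7, 0.6, 0.62, 0.65, 0.7, 0.5]
print(early_stopping_epoch(losses, patience=3))  # 2
print(early_stopping_epoch(losses, patience=5))  # 6
```

With a short patience the run stops before the late improvement at epoch 6. A longer patience waits long enough to see it. For XOR itself, all four patterns are training data, so there is no separate validation set to monitor.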

After repeating this process for a sufficiently large number of training cycles, the network will usually converge to some state where the error of the calculations is small. In this case, one would say that the network has learned a certain target function. To adjust weights properly, one applies a general method for non-linear optimization that is called gradient descent.

Complete Keras Code To Solve XOR

In practice, we use very large data sets, and then defining the batch size becomes important for applying stochastic gradient descent. Avid observer of life and an AI enthusiast currently looking for a career in machine learning / data science. Coding a neural network from scratch strengthened my understanding of what goes on behind the scenes in a neural network. I hope that the mathematical explanation of neural networks, along with the Python code, will help other readers understand how a neural network works. As we know, for XOR the inputs (1,0) and (0,1) give output 1, and the inputs (1,1) and (0,0) give output 0.
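Here is a sketch of how a batch size splits the data for stochastic (mini-batch) gradient descent. Each epoch shuffles the example indices and yields consecutive slices. `minibatches` is a hypothetical helper; in Keras the equivalent knob is the `batch_size` argument of `model.fit`. With only the four XOR rows, full-batch training (`batch_size=4`) is the usual choice, so `batch_size=2` here is purely illustrative.

```python
import numpy as np


def minibatches(X, y, batch_size, rng):
    """Yield shuffled (X_batch, y_batch) pairs covering every example once per epoch."""
    idx = rng.permutation(X.shape[0])
    for start in range(0, len(idx), batch_size):
        batch = idx[start:start + batch_size]
        yield X[batch], y[batch]


# XOR truth table: (1,0) and (0,1) -> 1, (0,0) and (1,1) -> 0.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

rng = np.random.default_rng(0)
batches = list(minibatches(X, y, batch_size=2, rng=rng))
for bx, by in batches:
    print(bx.tolist(), by.ravel().tolist())
```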